Why is big data important? Big data analysis is carried out to obtain new, previously unknown information, and it is a crucial concept that is changing approaches in many spheres of our lives.

So, what is Big Data?

Big data is structured and unstructured data of huge volume and variety, together with the methods used to process it, which make distributed analysis of information possible. The term Big Data is usually dated to 2008 and attributed to Clifford Lynch, who wrote about it in a special issue of Nature. He described the explosive growth of the world's information and noted that new tools and more advanced technologies would be needed to master and control it.

In simple words, big data is a common name for large data sets and the methods for processing them. Such data is processed effectively with scalable software tools that appeared in the late 2000s as an alternative to traditional databases and Business Intelligence solutions.

What are the main characteristics of big data compared to traditional analysis approaches?

- The entire array of available data is processed at once.

- Data is processed in its original form.

- Correlations are searched for across all the data before the required information is extracted.

- Big data is analyzed and processed in real time, as it arrives.
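The last point, processing data as it arrives, can be illustrated with a minimal sketch. It assumes a hypothetical `sensor_stream` generator standing in for a real data feed and uses Welford's method to keep a running mean and variance, so no record has to be stored after it is processed. The class and function names are illustrative and do not come from any particular big data tool.

```python
import math


class RunningStats:
    """Mean and variance updated one record at a time (Welford's method)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, value):
        """Fold one newly arrived value into the statistics."""
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self):
        """Population variance of everything seen so far."""
        return self._m2 / self.count if self.count else 0.0


def sensor_stream():
    """Hypothetical source standing in for records arriving over time."""
    yield from [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]


stats = RunningStats()
for reading in sensor_stream():
    stats.update(reading)  # each record is processed on arrival, never stored

print(stats.count, stats.mean, math.sqrt(stats.variance))
```

The memory used stays constant however many records pass through, which is the property that makes this style of processing suitable for data that never stops arriving.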

Big Data Characteristics

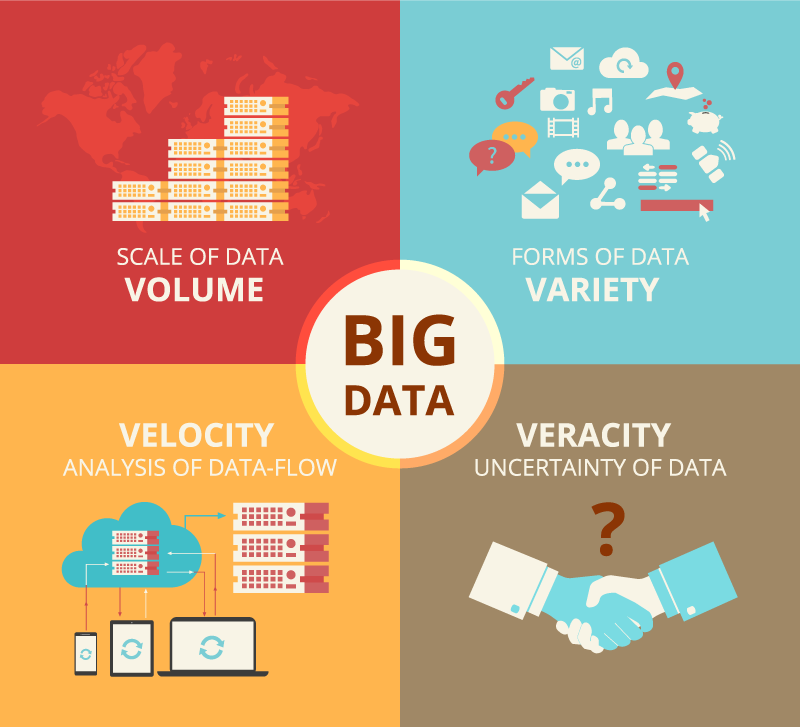

When talking about Big Data, people often mention the VVV rule: three attributes or properties that big data should have:

Volume – the amount of data, measured as the physical size of the stored documents.

Velocity – data is regularly updated, which requires constant processing.

Variety – data comes in heterogeneous formats and may be unstructured or partially structured.

Big Data Functions:

When asking why big data is important, one shouldn’t forget its main functions:

Data mining – processing and structuring data in order to identify patterns at the analytics stage. Diverse information is structured and brought to a common denominator, and hidden, non-obvious connections are searched for.

Machine learning – building models from the relationships discovered during analysis, then using them for analytics and forecasting on the processed, structured information.
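As a very small illustration of these two functions, the sketch below finds a pattern in structured data (a straight-line trend, fitted with ordinary least squares) and then uses it to forecast the next value. The monthly sales figures and the `fit_line` helper are hypothetical and chosen only to keep the arithmetic easy to follow. Real systems use far richer models and far more data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance_x = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical structured data: month number and units sold
months = [1, 2, 3, 4, 5]
units_sold = [12, 14, 16, 18, 20]

# "Data mining" step: discover the relationship between the two columns
slope, intercept = fit_line(months, units_sold)

# "Machine learning" step: use the discovered relationship to forecast
forecast_month_6 = slope * 6 + intercept
print(slope, intercept, forecast_month_6)
```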

Around 2007, a new type of machine learning became popular: deep learning. It improved neural networks to the level of limited (narrow) artificial intelligence. In ordinary machine learning, the computer learns from examples prepared by a programmer, while in deep learning the system itself builds multilevel computations and draws conclusions from them.

Big data sources include:

Internet – social networks, blogs, media, forums, websites, the Internet of Things (IoT).

Corporate data – transactional business information, archives, databases.

Device readings – sensors and instruments, as well as meteorological data, cellular data, etc.

For proper functioning, a big data system must be based on certain principles:

Horizontal scalability – any system that processes big data must be able to scale out. If the data volume doubles, the number of servers in the cluster should be able to double as well.

Fault tolerance – with a large number of machines, some will inevitably fail, so the system must keep working when they do.

Data locality – to reduce costs, data should be processed on the same server where it is stored.
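The three principles above can be sketched together in a few lines. This is an illustrative toy and not how any specific framework implements them: the node names, the capacity figures, and the helpers `servers_needed`, `place_block` and `pick_worker` are all assumptions made for the example. Each block of data is stored on two nodes, a task is sent to a live node that already holds the block, and a replica takes over when the first node fails.

```python
import hashlib
import math


def servers_needed(data_tb, capacity_tb_per_server):
    """Horizontal scaling: the server count grows in step with data volume."""
    return math.ceil(data_tb / capacity_tb_per_server)


def place_block(block_id, nodes, replicas=2):
    """Choose `replicas` distinct nodes for a block using a stable hash."""
    digest = hashlib.sha256(block_id.encode("utf-8")).digest()
    start = int.from_bytes(digest[:8], "big") % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(replicas)]


def pick_worker(block_id, placement, alive):
    """Data locality with failover: use a live node that stores the block."""
    for node in placement[block_id]:
        if node in alive:
            return node
    raise RuntimeError(f"no live replica holds {block_id}")


# Hypothetical four-node cluster and three blocks of data
nodes = ["node-a", "node-b", "node-c", "node-d"]
placement = {b: place_block(b, nodes) for b in ["block-1", "block-2", "block-3"]}

alive = set(nodes)
primary = pick_worker("block-1", placement, alive)

alive.discard(primary)  # simulate a machine failure
fallback = pick_worker("block-1", placement, alive)

# Doubling the data doubles the number of servers required
print(servers_needed(100, 10), servers_needed(200, 10))
print(primary, fallback)
```

Keeping more than one copy of each block is what lets the scheduler honor locality and fault tolerance at the same time: the task still runs where the data lives, just on a different machine.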

Why is big data important: big data applications

Big data is used most actively in the financial and medical sectors, in high-tech and Internet companies, and in the public sector.

Big data in business

Everyone who deals with big data can be divided into several groups:

- Infrastructure providers – solve the problems of data storage and preprocessing (for example: IBM, Microsoft, Oracle, SAP and others).

- Data miners – developers of algorithms that help customers extract valuable information. Among them: Yandex Data Factory, Algomost, Glowbyte Consulting, CleverData, etc.

- System integrators – companies that implement big data analysis systems on the client side. For example: Force, Krok, etc.

- Consumers – companies that buy hardware and software systems and order algorithms from consultants.

- Ready-made service developers – offer turnkey solutions based on access to big data. They open Big Data to a wide range of users.

The main suppliers of big data are search engines. They have access to large data arrays and a sufficient technological base to create new services.

Big Data in Marketing

Analyzing information about a company opens up new possibilities:

- Understand how the business works in numbers.

- Study competitors.

- Get to know your customers.

With this, marketing can reach a new level of understanding and analytics, which will reduce costs and increase sales.

Source: www.uplab.ru/